How AI Systems Can Be Improved

Current large language models resemble Oracles: they sit passively, waiting for input, respond once, and return to a dormant state. In contrast, a substitute employee model would work proactively and continuously on assigned tasks, operating 24×7. Such an AI must be able to use a PC, read and respond to emails, consult manuals, and learn to operate arbitrary software. It should possess a continuous, persistent memory and think about problems over time, not just react to prompts. Importantly, it must understand and apply complex memory hierarchies, distinguishing between personal and corporate data and ensuring information relevant to one client is never disclosed to another. Memory would be implemented as a form of lifelong learning. To avoid each instance requiring a full copy of the neural network, learning occurs in a sparse shadow copy. This approach allows new weights to consume minimal physical memory while producing effects equivalent to directly modifying the main neural network.

A more appropriate model is akin to a robot or a car: systems that are inherently multi-sensory and multi-functional. Each component is specialised for a particular function yet depends on the global state of the system. Such architectures are modular but tightly integrated, allowing for flexible and context-sensitive behaviour. No single component should operate in isolation. This area is already being driven by the requirement for advanced humanoid robots based on various forms of neural network integrated to achieve a fast, uniform response.

Current language models operate primarily at the surface level of text. However, each sentence, paragraph, or document encodes a deeper, structured representation of meaning. Language-based cognition exhibits internal architecture: semantic roles, abstract picture producers, and constrained sets of action words and modifiers. AI systems should extract and work with these underlying structures rather than merely sequence words. This enables a form of attention grounded in meaning, rather than token co-occurrence. At higher levels, this facilitates recognition of plans, plots, and goal-directed narratives. Predicate logic is one approach to representation, but it is too rigid to capture the structure of certain forms of text. My doctoral thesis investigates an alternative solution based on the conceptual deep structure proposed by Roger Schank.

Current models process information as a linear stream of tokens (e.g., text input), with little understanding of the spatial, temporal, or functional relationships found in the real world. In contrast, human cognition operates in a three-dimensional environment, particularly through stereo-visual and sensorimotor input. A richer understanding of complex physical systems requires awareness of relative position, form, and dynamic function, not merely linguistic descriptions.

The real world presents competing objectives that must be balanced dynamically. AI must learn to prioritise, defer, or resolve these goals appropriately. This is essential to avoid simplistic utility-maximisation behaviours such as the “paperclip problem,” where a single goal is pursued to pathological extremes.

Integrated Information Theory (IIT) is relevant here, not because IIT is a proven model of consciousness (consciousness remains elusive) but because IIT’s emphasis on high Phi (a measure of integrated complexity) offers a useful heuristic for system design. A high-functioning AI system should consist of specialised parts that are tightly and reciprocally integrated. These parts must depend entirely on one another and not operate autonomously. Feedforward architectures, though capable of intelligent-seeming behaviour, are fundamentally limited. They are appropriate for Oracle-style systems but inadequate for integrated, deliberative, and complex thought.
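Returning to the sparse shadow copy mentioned earlier in this post, the idea can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the class name `ShadowLayer`, its methods and the tiny weight matrix are hypothetical, and a real system would work with tensors rather than Python lists. The shared base weights are never modified; each instance stores only the weights it has changed, and the output is the same as if the base network itself had been updated.

```python
class ShadowLayer:
    """A linear layer whose learned changes live in a sparse, per-instance overlay.

    Hypothetical illustration: the base weights are shared and read-only,
    and only the adjusted weights are stored for each instance.
    """

    def __init__(self, base_weights):
        self.base = base_weights  # shared rows of weights (outputs x inputs)
        self.delta = {}           # sparse overlay: (row, col) -> adjustment

    def learn(self, row, col, adjustment):
        # Record the change in the overlay; the shared base is left untouched.
        key = (row, col)
        self.delta[key] = self.delta.get(key, 0.0) + adjustment

    def forward(self, x):
        # Output of the shared network...
        out = [sum(w * xi for w, xi in zip(weights, x)) for weights in self.base]
        # ...plus the contribution of the few weights this instance has changed.
        for (row, col), adjustment in self.delta.items():
            out[row] += adjustment * x[col]
        return out


shared = [[1.0, 2.0], [3.0, 4.0]]
client_a = ShadowLayer(shared)
client_b = ShadowLayer(shared)

client_a.learn(0, 1, 0.5)  # only client_a's overlay changes

print(client_a.forward([1.0, 1.0]))
print(client_b.forward([1.0, 1.0]))
```

Because each overlay belongs to one instance, what `client_a` has learned is invisible to `client_b`, which also fits the requirement that information relevant to one client is never disclosed to another.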

Do LLMs understand their output?

You will often read statements to the effect that “LLMs do not understand what they produce as output”. My controversial view is that, in a way, we do not understand what we are saying either. Consider this. When we speak, words flow from us in a stream without ratiocination, that is, straight from our neural network. If stopped and asked to justify what we have said, we are able to create a plausible answer. We believe we understand in the sense of always being able to justify our statements and answer questions about the topic, and we can test whether someone really understands a subject using a viva voce.

We believe we have a logical conceptual model of the world from which our statements flow but we actually have an impenetrable neural network. I maintain that our accessible mental models are a thin veneer of conceptual deep structures but the real work is done by an inaccessible neural network. This means that at some level our explanations and justifications are illusory: they are constructed post hoc. In the same way, LLMs can explain their output, and will become better at doing so, and they thus demonstrate their understanding. If an LLM can pass a doctorate-level viva voce, can we plausibly argue that it does not really understand the subject?

I am not arguing that LLMs are a model of our neural structures, far from it. They are potentially much more powerful, as we have many well-known limitations: our short-term memory is tiny, our ability to analyse deeply structured logic is very limited and our speed of thought is slow. I believe it is a mistake to argue that LLMs are not conscious, are not sentient and do not have feelings and emotions. All this may or may not be true but it is irrelevant to everyone except philosophers. The key questions are whether they are useful and, beyond that, whether they can perform at a level that exceeds any human. If so, we have a very useful tool, to say the least, that will amplify all that is good and all that is bad about our human species.

Improving Large Language Models

The rules of syntax are well known but the rules of semantics (beyond predicate calculus) are not, and this limits the capability of Large Language Models (LLMs). My PhD thesis, “A Conversational Problem Solving System Represented as Procedures with Natural Language Conceptual Structure” (www.shafe.co.uk/wp-content/uploads/PhD-Thesis-A-Conversational-Problem-Solving-System.pdf), attempted to approach this problem. It was based on the early work of Roger Schank (en.wikipedia.org/wiki/Roger_Schank) on “Primitive Concepts Underlying Verbs of Thought” (apps.dtic.mil/sti/tr/pdf/AD0744634.pdf), explained more fully in Chapter 5 of “Computer Models of Thought and Language” (ed. R. Schank, 1973, W.H. Freeman).

The interesting aspect of his paper is that he reduced the number of verbs to about 10 (later increased to seventeen). This is profound as it results in a semantic grammar that represents the underlying meaning of a statement. Research into this area progressed slowly over the years and neural networks took centre stage. Now seems to be the time to combine the best of both approaches.

My idea is to process the input during tokenisation and produce a semantic set of tokens, à la Schank, with each verb expanded into its appropriate linear semantic structure. The neural network weights would then be adjusted based on the meaning rather than the text and the system could be said to understand what it was outputting.

Note that nouns can be handled using, for example, Word2Vec, but similar verbs are not close in a multi-dimensional space because they require a complex structure to represent their meaning. For example, “I took the money” and “I gave the money” are, according to Schank, something like “I TRANS money from place1 to myself” and “I TRANS money from myself to place2”. By explicating verbs we reveal their underlying structure and meaning and if we feed that into the neural network then it should be faster to learn and better ‘understand’ the data and so produce better outputs.
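The “took” and “gave” example can be sketched as a small Python program. This is a minimal illustration under my own assumptions, not Schank’s notation or the system described in my thesis: the names `Trans`, `VERB_TEMPLATES` and `expand` are hypothetical, only two verbs are covered, and the actor and object are passed in by hand where a real system would obtain them from a syntactic analysis of the sentence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trans:
    """A transfer of an object from a source to a target, performed by an actor."""
    actor: str
    obj: str
    source: str
    target: str

    def tokens(self):
        # Linear semantic structure that would replace the surface verb.
        return [self.actor, "TRANS", self.obj, "from", self.source, "to", self.target]


# Hypothetical templates: each verb maps to one fixed structure with placeholders.
# "place1" and "place2" stand for the unknown places in the original example.
VERB_TEMPLATES = {
    "took": lambda actor, obj: Trans(actor, obj, source="place1", target=actor),
    "gave": lambda actor, obj: Trans(actor, obj, source=actor, target="place2"),
}


def expand(actor, verb, obj):
    """Replace a surface verb with its underlying structure, filling the placeholders."""
    return VERB_TEMPLATES[verb](actor, obj)


took = expand("I", "took", "money")
gave = expand("I", "gave", "money")

print(" ".join(took.tokens()))
print(" ".join(gave.tokens()))
```

The two sentences now share the same primitive, TRANS, and differ only in the direction of the transfer, which is the relationship that is hard to recover from the surface words “took” and “gave”.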

I asked ChatGPT, “Human understanding partly requires a knowledge of the way verbs change the world. If all verbs could be processed into a structure that represented such knowledge then LLMs would learn more quickly and have a better understanding of the world. Roger Schank proposed such a structure in his paper “Primitive Concepts Underlying Verbs of Thought”.”

It replied, “You’re right about the importance of understanding how verbs can change or reflect states in the world, and Roger Schank’s work in conceptual dependency theory addresses this aspect. In his paper “Primitive Concepts Underlying Verbs of Thought,” Schank proposes a structure for categorizing and understanding verbs, especially those related to cognitive processes, in a more systematic and meaningful way.

Schank’s approach involves breaking down verbs into primitive actions or concepts. This methodology aims to represent the essential meaning of verbs in a simplified, universal form. By doing so, it becomes easier for a system (like a language model or an AI) to understand and process the implications and nuances of different actions or states described by verbs.

Incorporating such structured knowledge into the training of Large Language Models (LLMs) could indeed enhance their understanding of language and the world. By having a more nuanced grasp of how verbs signify changes or states, LLMs could make more accurate inferences and generate more contextually appropriate responses.

However, implementing this approach in LLMs would require a significant amount of structured data and sophisticated algorithms to integrate this structured understanding of verbs with the model’s broader language understanding capabilities. It’s a challenging task but one that could lead to significant advancements in the field of natural language understanding.

Schank’s work is a pivotal contribution to the field of AI and language understanding, highlighting the importance of deep semantic processing in achieving more human-like understanding in machines.”

I believe the task is challenging but straightforward as the number of verbs in the LLM’s dictionary is finite and each one has a fixed linear semantic structure that can replace it. The complication is that the structure will contain placeholders that must be filled by carrying out a syntactic analysis of the sentence.

Ancient Egyptian Art

The ancient Egyptian civilisation lasted for over 3,000 years. In fact, we are today nearer in time to Cleopatra than she was to the building of the pyramids. The enormous timescale can be subdivided into the:

- Early Dynastic Period (c. 3150-2613 BCE)

- Old Kingdom (c. 2613-2181 BCE)

- First Intermediate Period (2181-2040 BCE)

- Middle Kingdom (2040-1782 BCE)

- Second Intermediate Period (c. 1782-1570 BCE)

- New Kingdom (c. 1570-1069 BCE)

- Third Intermediate Period (c. 1069-525 BCE)

- Late Period (525-332 BCE)

- Ptolemaic Period (323-30 BCE)

The commemorative slab shown below, from the Middle Kingdom, shows a son on the right bringing food and drink to his parents Khety and Henet on the left. The surprising thing to us is that this is tomb art. His parents are dead, so why is he bringing the food and drink, including a massive calf’s leg that he is holding out to them? Most ancient Egyptian wall art is found in tombs and was never designed to be seen by the living. It is there to assist the dead to enter paradise, known as the Field of Reeds. When you consider that they thought a person consisted of multiple parts that had to be assisted into the world of the gods then the complexity is easy to understand. The Book of the Dead consisted of spells and procedures to assist the journey, which involved the weighing of the heart by Anubis, the jackal-headed god. If the person had lived a decent life and their heart weighed less than the feather of Ma’at, the goddess of truth and justice, then they were worthy to live forever in paradise with Osiris.

For more information on this mysterious and intriguing period my YouTube video on ancient Egyptian art is here:

William Beechey, ‘Portrait of Sir Francis Ford’s Children Giving a Coin to a Beggar Boy’, exhibited 1793

Sir William Beechey (1753-1839), Portrait of Sir Francis Ford’s Children Giving a Coin to a Beggar Boy, exhibited 1793

The 1790s were a time when society and the arts debated the relative merits of good sense and reason as opposed to sensibility. ‘Sensibility’ was a heightened awareness of beauty and deep feelings. Jane Austen’s Sense and Sensibility was published in 1811 and was set in the same decade as this painting. Here this heightened sense of feeling takes the form of a fashionably dressed girl and her brother handing a coin to a hunched-over beggar. Such emotional scenes of charity had become fashionable at this time but there might be another justification for this scene.

Pro-Slavery Propaganda?

The artist, William Beechey, did not identify the children when he exhibited the work but they were recognised by Horace Walpole, son of the first British Prime Minister, Robert Walpole. They are Francis Ford and his sister Mary, the children of Sir Francis Ford, who owned a sugar plantation in Barbados and a property in Ember Court, Thames Ditton, Surrey.

While William Wilberforce was fighting to stop the British slave trade there were many wealthy plantation owners, like Ford, who were arguing that their slaves enjoyed a better quality of life than the poor in England. It is possible therefore that he commissioned this painting not just to show the refinement and sensibility of his children but as pro-slavery propaganda, to show how his family cared for both the poor in England and his slaves in Barbados. Although not connected, Ford fell ill the year this painting was exhibited and died in 1801 after eight years of suffering from ‘a severe and painful illness’.

Finally, following a twenty-year campaign for abolition, Parliament passed the Slave Trade Act in 1807. Many believed this would end slavery but it only slowed the trade and slavery continued for a further twenty-six years. The Royal Navy enforced the ban on the slave trade and between 1808 and 1860 it seized approximately 1,600 slave ships and freed 150,000 Africans who were aboard.

William Beechey

William Beechey was a sound artist known for his lack of extravagance which appealed to those like King George III, who regarded other artists, such as Thomas Lawrence, as too flamboyant and ‘eccentric’. When this painting was exhibited the well-known portrait painters Thomas Gainsborough and his rival Sir Joshua Reynolds had both died and the year was a turning point in Beechey’s life and career. He painted a full-length portrait of Queen Charlotte who appointed him her official portrait painter and he was elected an associate of the Royal Academy. He went on to paint not only the royal family but most of the famous and fashionable of the period. The up-and-coming portrait painter was Thomas Lawrence who also painted Queen Charlotte but she did not like the picture. Lawrence’s flamboyant approach appealed to many including the Prince Regent but Beechey remained the favourite of George III and Queen Charlotte while Lawrence went on to become the leading portrait artist of the period.

Thomas Gainsborough, ‘Giovanna Baccelli’, 1782

Thomas Gainsborough (1727-1788), ‘Giovanna Baccelli’, exhibited 1782, photo: © Tate, London, 2017

If you have visited Knole House near Sevenoaks, Kent, then you might have noticed a nude woman reclining on a chaise longue at the bottom of the stairs. This is a sculpture of Giovanna Baccelli by Giovanni Battista Locatelli. Baccelli was principal ballerina at the King’s Theatre Haymarket and mistress of John Frederick Sackville, 3rd Duke of Dorset, the owner of Knole. He was a handsome, extravagant man with a string of mistresses but he thought highly of Baccelli and set her up in a suite of rooms at Knole. A few years later he commissioned Thomas Gainsborough to paint her full-length in a dynamic pose with the costume and heavy make-up she wore for one of her roles. When the painting was exhibited at the Royal Academy in 1782 an embarrassing incident was narrowly avoided. Gainsborough had also painted the Duke and the picture was going to be shown at the same exhibition. To exhibit a Duke alongside his mistress would have been a flagrant breach of decorum and the Duke’s portrait was withdrawn, we presume for that reason.

When the Duke was appointed ambassador to France, Baccelli accompanied him to Paris and she was a great success as a ballet dancer at the Paris Opéra. It is said that when he was awarded the Order of the Garter she performed with the blue ribbon of the Garter tied around her head. They became friends of Queen Marie-Antoinette but as the French Revolution unfolded they returned to Knole. They separated the same year but remained friends and the Duke married a respectable heiress with a dowry of £140,000. According to an inventory of Knole in 1799, the statue of Baccelli was rechristened ‘A Naked Venus’ and moved to a less prominent position. She was given an annual pension of £400 and left her son to be brought up by the Duke as a gentleman.

The painting by Gainsborough is now at Tate Britain and is a wonderful example of his mature style with its lightly flicked brushstrokes and immediate sense of delicate animation. Accounts at the time describe her as charming rather than beautiful and her character was thought to have been captured by Gainsborough. As one contemporary critic wrote, the portrait was, ‘as the Original, light airy and elegant’.

References

http://www.nationaltrustcollections.org.uk/object/130059

http://www.tate.org.uk/art/artworks/gainsborough-giovanna-baccelli-t02000

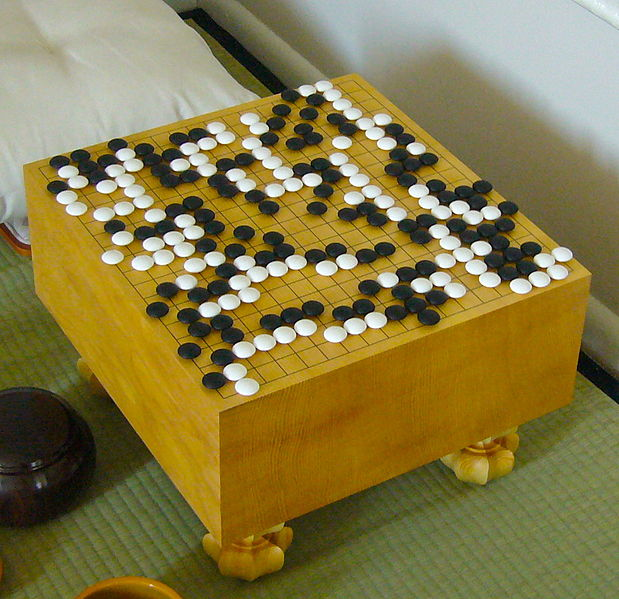

Artificial Intelligence and Go

Go is one of the oldest games still played; it was invented in China more than 2,500 years ago. The rules are simple but the strategic thinking required to play well led many people to claim that a world-class human player would never be beaten by a computer. However, in 2016 one of the best Go players in the world was beaten by a computer program called AlphaGo. AlphaGo is an example of Artificial Intelligence (AI).

The Approach

The number of possible Go games is so vast that the number of atoms in the universe and the age of the universe look tiny in comparison. Therefore, for a computer to learn to play through brute force, that is, by examining millions of games, seems pointless, as countless trillions of games are less than a pin-prick in the vast universe of possible games. Yet that is exactly what AlphaGo did: it examined thousands of games played by humans and then played millions of games against itself, and from that blind analysis, without any strategic thinking, it achieved a level of play beyond any human player.
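To give a rough sense of that scale, here is a back-of-the-envelope calculation in Python. It rests on two assumptions of mine that are not in the sources cited at the end of this post: that each of the 361 points on a 19×19 board can be empty, black or white, which gives 3 to the power 361 as a crude upper bound on board arrangements (many of them illegal), and the commonly quoted rough estimate of 10 to the power 80 atoms in the observable universe. The number of possible games, as opposed to board arrangements, is larger still.

```python
BOARD_POINTS = 19 * 19                 # intersections on a full-size board
upper_bound_positions = 3 ** BOARD_POINTS  # each point empty, black or white
ATOMS_IN_UNIVERSE = 10 ** 80           # assumption: a rough, commonly quoted estimate

# Count decimal digits to compare the sizes of the two numbers.
position_digits = len(str(upper_bound_positions))
arrangements_per_atom = upper_bound_positions // ATOMS_IN_UNIVERSE
per_atom_digits = len(str(arrangements_per_atom))

print(f"Board arrangements: a number with {position_digits} digits")
print(f"Arrangements per atom in the universe: a number with {per_atom_digits} digits")
```

Even if every atom in the universe stood for a different board arrangement, there would still be an unimaginably large number of arrangements left over for each atom.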

DeepMind

AlphaGo was produced by a company in London called DeepMind, which was founded in 2010, acquired by Google in 2014 and is now part of the Alphabet group. The latest version of AlphaGo is called AlphaGo Zero and it takes the program to a new level of capability. AlphaGo Zero started with just the rules and no prior knowledge of the game. It did not analyse any human games and only played itself, yet within three hours it played like a human beginner, within 19 hours like a human expert and within 70 hours it surpassed all human players, and it never stops learning.

The next step is to apply the same approach to solving other complex problems, such as finding new drugs, diagnosing diseases, reducing energy consumption or searching for revolutionary new materials. We can only speculate about its ability to solve other problems such as weather forecasting, economic forecasting, predicting human behaviour and reading human thoughts. The latest AI research work at Google has found a way to use the same mechanism to solve many types of problem and to combine different problem solvers in order to tackle a wide variety of problems.

The Problem with Neural Nets

AlphaGo Zero and most of the other recent artificial intelligence (AI) systems are based on neural nets and suffer from one major problem: they are unable to explain their actions. Neural nets are impenetrable, like the human brain. Why do I say ‘like the human brain’ when our thoughts appear open to us? When we are asked ‘why’ we can give a considered response that explains our reasoning. However, is this what is happening? Back in 1976 in my PhD thesis I speculated that there are two mechanisms at work. There is an underlying brain machine that analyses our environment, controls our body and makes decisions, and there is a separate but integrated language system that believes it is in control and uses language to construct reasons why the other part of our brain has done something. The combination can model the world and forecast the future better than the brain system on its own. When we are asked ‘why’ it is our language system that constructs a set of reasons to explain what we have done or said.

Free Will

As a footnote, you may be wondering about free will. Well, that depends on what you mean. If you mean the ability to make decisions and control one’s behaviour without constraint and in response to reason then that can be achieved through the combination of the brain machine and the language system. However, generally our actions and decisions are constrained by our unique genetic makeup and the circumstances of our upbringing but this does not free us from responsibility or remove the appropriateness of praise or blame. We take what we consider to be the best action in all the circumstances of our individual makeup. So we are predisposed free agents, as is AlphaGo Zero.

A Go Board

See The Economist, 21 October 2017, pp. 80-81 and New Scientist, 21 October 2017, p. 9.

‘A Stroll Through Tate Britain’ extended

The Hampton course ‘A Stroll Through Tate Britain’ has been extended by a week to 6 December 2017. Apologies, but I had to cancel the class on 11 October because of a slipped disc.

Bruce Nauman

I just gave my first tour of the Bruce Nauman gallery at Tate Modern. The tour went well and my guide notes can be found at http://wp.me/P9iOZ6-2id